Round Robin Load Balancing Definition

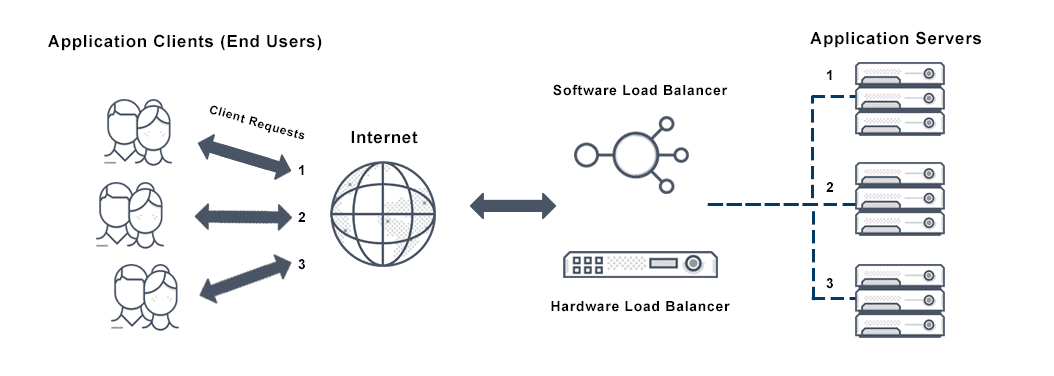

Round robin load balancing is a simple way to distribute client requests across a group of servers. Each client request is forwarded to the next server on the list in turn. When the load balancer reaches the end of the list, it goes back to the top and repeats the cycle.

What is Round Robin Load Balancing?

Easy to implement and conceptualize, round robin is the most widely deployed load balancing algorithm. Using this method, client requests are routed to available servers on a cyclical basis. Round robin server load balancing works best when servers have roughly identical computing capabilities and storage capacity.

How Does Round Robin Load Balancing Work?

In a nutshell, round robin network load balancing rotates connection requests among web servers in the order that requests are received. For a simplified example, assume that an enterprise has a cluster of three servers: Server A, Server B, and Server C.

• The first request is sent to Server A.

• The second request is sent to Server B.

• The third request is sent to Server C.

The load balancer continues passing requests to servers in this order, so the fourth request goes back to Server A, the fifth to Server B, and so on. This spreads requests evenly across the servers so that the cluster can handle high traffic.
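The rotation described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production load balancer: the server names come from the example, and the `round_robin` helper is a hypothetical name introduced here.

```python
from itertools import cycle

# Server names taken from the three-server example above.
servers = ["Server A", "Server B", "Server C"]

def round_robin(pool):
    """Return an iterator that walks the pool in order and wraps around forever."""
    return cycle(pool)

rotation = round_robin(servers)

# Assign five incoming requests; the fourth wraps back to Server A.
assignments = [next(rotation) for _ in range(5)]
print(assignments)
# ['Server A', 'Server B', 'Server C', 'Server A', 'Server B']
```

Note that the rotation ignores how busy each server is; it only tracks whose turn comes next.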

What is the Difference Between Weighted Load Balancing vs Round Robin Load Balancing?

The biggest drawback of using the round robin algorithm in load balancing is that the algorithm assumes that servers are similar enough to handle equivalent loads. If certain servers have more CPU, RAM, or other resources, the algorithm has no way to distribute more requests to these servers. As a result, servers with less capacity may overload and fail more quickly while capacity on other servers lies idle.

The weighted round robin load balancing algorithm allows site administrators to assign weights to each server based on criteria like traffic-handling capacity. Servers with higher weights receive a higher proportion of client requests. For a simplified example, assume that an enterprise has a cluster of three servers:

• Server A can handle 15 requests per second, on average

• Server B can handle 10 requests per second, on average

• Server C can handle 5 requests per second, on average

Next, assume that the servers are weighted in proportion to their capacity (3:2:1) and that the load balancer receives 6 requests.

• 3 requests are sent to Server A

• 2 requests are sent to Server B

• 1 request is sent to Server C

In this manner, the weighted round robin algorithm distributes the load according to each server’s capacity.
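One simple way to sketch weighted round robin is to repeat each server in the rotation according to its weight. The example below assumes the weights are derived by dividing each capacity by the greatest common divisor (15:10:5 becomes 3:2:1); the `weighted_rotation` helper is a hypothetical name, and real load balancers often interleave servers more smoothly than this.

```python
from collections import Counter
from functools import reduce
from itertools import cycle
from math import gcd

# Average requests per second from the example above.
capacity = {"Server A": 15, "Server B": 10, "Server C": 5}

def weighted_rotation(capacities):
    """Build a repeating schedule in which each server appears once per unit of weight."""
    divisor = reduce(gcd, capacities.values())  # 5 for the capacities above
    schedule = []
    for server, cap in capacities.items():
        schedule.extend([server] * (cap // divisor))  # weights 3, 2, 1
    return cycle(schedule)

rotation = weighted_rotation(capacity)

# Route six requests and count how many each server received.
counts = Counter(next(rotation) for _ in range(6))
print(dict(counts))
# {'Server A': 3, 'Server B': 2, 'Server C': 1}
```

This naive schedule sends Server A its three requests back to back. It matches the proportions in the example, but an implementation that spreads the heavier server's turns across the cycle would avoid short bursts.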

What is the Difference Between Load Balancer Sticky Session vs. Round Robin Load Balancing?

A load balancer that keeps sticky sessions will create a unique session object for each client. For each request from the same client, the load balancer routes the request to the same web server each time, where data is stored and updated as long as the session exists. Sticky sessions can be more efficient because unique session-related data does not need to be migrated from server to server. However, sticky sessions can become inefficient if one server accumulates multiple sessions with heavy workloads, disrupting the balance among servers.

If a sticky load balancer is configured to balance round robin style, a user's first request is routed to a web server using the round robin algorithm. Subsequent requests are then forwarded to the same server until the sticky session expires, at which point the round robin algorithm is used again to set a new sticky session. Conversely, if the load balancer is non-sticky, the round robin algorithm is used for each request, regardless of whether or not requests come from the same client.
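The interaction between sticky sessions and round robin can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `StickyRoundRobin` class, the client identifiers, the fixed session lifetime measured from the first request, and the explicit `now` argument used in place of a real clock.

```python
from itertools import cycle

class StickyRoundRobin:
    """Pick a server by round robin once per client, then reuse it until the session expires."""

    def __init__(self, servers, ttl_seconds):
        self._rotation = cycle(servers)
        self._ttl = ttl_seconds
        self._sessions = {}  # client_id -> (server, expiry_time)

    def route(self, client_id, now):
        entry = self._sessions.get(client_id)
        if entry is not None and now < entry[1]:
            return entry[0]  # session still valid: stay on the same server
        # No session or session expired: fall back to round robin.
        server = next(self._rotation)
        self._sessions[client_id] = (server, now + self._ttl)
        return server

balancer = StickyRoundRobin(["Server A", "Server B", "Server C"], ttl_seconds=30)
first = balancer.route("client-1", now=0)          # round robin picks Server A
other = balancer.route("client-2", now=1)          # round robin picks Server B
repeat = balancer.route("client-1", now=10)        # sticky: still Server A
after_expiry = balancer.route("client-1", now=40)  # expired: round robin picks Server C
print(first, other, repeat, after_expiry)
```

A non-sticky balancer would skip the session lookup entirely and call the rotation for every request.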

What is the Difference Between Round Robin DNS vs. Load Balancing?

Round robin DNS uses a DNS server, rather than a dedicated hardware load balancer, to load balance using the round robin algorithm. With round robin DNS, each website or service is hosted on several redundant web servers, which are usually geographically distributed. Each server has its own unique IP address for the same website or service. Using the round robin algorithm, the DNS server rotates through these IP addresses when answering queries, balancing the load between the servers.
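The rotation a round robin DNS server performs can be simulated as follows. This is a toy model and not a real DNS implementation: the `RoundRobinDNS` class is hypothetical, the hostname is a placeholder, and the addresses come from the 192.0.2.0/24 range reserved for documentation.

```python
from collections import deque

class RoundRobinDNS:
    """Toy resolver that rotates the address list for a name after every query."""

    def __init__(self, records):
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name):
        ips = self._records[name]
        answer = list(ips)  # clients typically try the first address in the answer
        ips.rotate(-1)      # move the first address to the end for the next query
        return answer

dns = RoundRobinDNS({"www.example.com": ["192.0.2.1", "192.0.2.2", "192.0.2.3"]})
first_answer = dns.resolve("www.example.com")
second_answer = dns.resolve("www.example.com")
print(first_answer[0], second_answer[0])
# 192.0.2.1 192.0.2.2
```

In practice, caching by resolvers and clients means the distribution is less even than this simulation suggests.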

What is the Difference Between DNS Round Robin vs. Network Load Balancing?

As mentioned above, round robin DNS refers to a specific load balancing mechanism with a DNS server. On the other hand, network load balancing is a generic term that refers to network traffic management without elaborate routing protocols like the Border Gateway Protocol (BGP).

What is the Difference Between Load Balancing Round Robin vs. Least Connections Load Balancing?

With least connections load balancing, load balancers send requests to servers with the fewest active connections, which minimizes chances of server overload. In contrast, round robin load balancing sends requests to servers in a rotational manner, even if some servers have more active connections than others.
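The contrast can be made concrete with a short sketch. The connection counts below are invented for illustration, and `pick_least_connections` is a hypothetical helper rather than any product's API.

```python
from itertools import cycle

# Hypothetical snapshot of active connections per server.
active = {"Server A": 12, "Server B": 3, "Server C": 7}

def pick_least_connections(connections):
    """Return the server with the fewest active connections (first one wins a tie)."""
    return min(connections, key=connections.get)

least_conn_choice = pick_least_connections(active)

# Round robin ignores the counts and simply takes the next server in order.
round_robin_choice = next(cycle(active))

print(least_conn_choice, "|", round_robin_choice)
# Server B | Server A
```

Here round robin sends the request to Server A even though it is already the busiest server, while least connections picks Server B.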

What Are the Benefits of Round Robin Load Balancing?

The biggest advantage of round robin load balancing is that it is simple to understand and implement. However, the simplicity of the round robin algorithm is also its biggest disadvantage, which is why many load balancers use weighted round robin or more complex algorithms.

Does Avi Networks Offer Round Robin Load Balancing?

Yes, enterprises can configure round robin load balancing with Avi Networks. The round robin algorithm is most commonly used when conducting basic tests to ensure that new load balancers are correctly configured.

For more on the actual implementation of load balancers, check out our Application Delivery How-To Videos.

For more information see the following round robin load balancing resources: