Server Load Balancing Definition

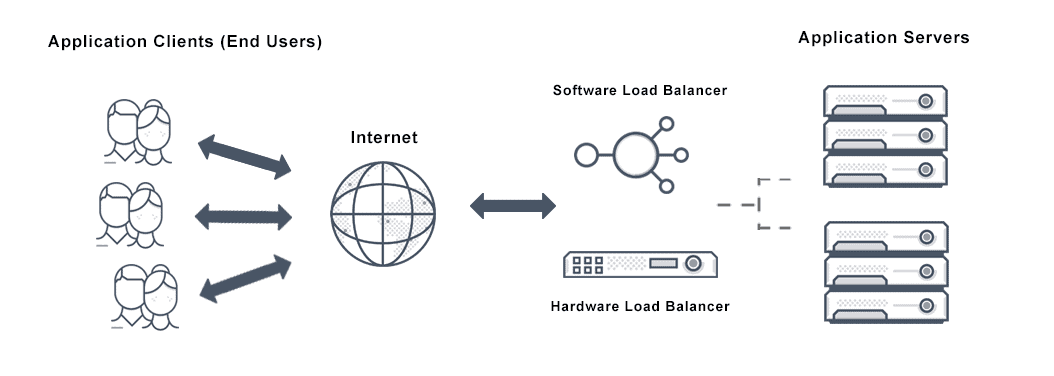

Server Load Balancing (SLB) is a technology that distributes the traffic of high-traffic sites across several servers using a network-based hardware appliance or a software-defined appliance. When load balancing spans multiple geographic locations, the intelligent distribution of traffic is referred to as global server load balancing (GSLB). The servers can be on premises in a company’s own data centers or hosted in a private or public cloud.

Server load balancers intercept traffic for a website and reroute it to the servers in a pool.

What is Server Load Balancing?

Server Load Balancing (SLB) provides network services and content delivery using a set of load balancing algorithms, such as round robin or least connections, to decide which server responds to each client request arriving over the network. By distributing client traffic across servers, it keeps application delivery consistent and high-performing.

Server load balancing helps ensure reliable application delivery, scalability, and high availability.
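Load balancing products implement their algorithms differently. As a minimal sketch, assuming a hypothetical `Server` record that tracks open connections, two common algorithms (round robin and least connections) could pick a server like this:

```python
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical backend record; a real load balancer tracks far more state."""
    name: str
    active_connections: int = 0


class RoundRobin:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Picks the server currently handling the fewest open connections."""

    def __init__(self, servers):
        self.servers = servers

    def pick(self):
        # min() returns the first server on a tie, so pool order breaks ties.
        return min(self.servers, key=lambda s: s.active_connections)


if __name__ == "__main__":
    pool = [Server("app-1"), Server("app-2"), Server("app-3")]
    rr = RoundRobin(pool)
    print([rr.pick().name for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

    pool[0].active_connections = 5
    pool[1].active_connections = 2
    pool[2].active_connections = 7
    print(LeastConnections(pool).pick().name)  # app-2
```

Round robin ignores server state, which suits pools of similar servers; least connections adapts to requests of uneven duration at the cost of tracking connection counts.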

How does Server Load Balancing Work?

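Server load balancing generally takes one of two main forms:

• Transport-level (layer 4) load balancing acts independently of the application payload, making decisions from connection information such as IP addresses, ports, and protocol. DNS-based approaches, which answer name lookups with different server addresses, likewise work without inspecting the payload.

• Application-level (layer 7) load balancing inspects the application data itself, such as HTTP headers, URLs, or cookies, and can combine it with server load to make balancing decisions.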
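The difference between the two levels can be sketched as two selection functions. This is an illustration under assumptions: the backend addresses, path prefixes, and pool names are hypothetical, and real layer 4 balancers typically hash in the data path rather than in application code.

```python
import hashlib

# Hypothetical backend addresses and pool names, for illustration only.
BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
PATH_ROUTES = {"/api/": "api-pool", "/static/": "static-pool"}
DEFAULT_POOL = "web-pool"


def pick_transport_level(src_ip, src_port, dst_ip, dst_port, proto="tcp"):
    """Layer 4: choose a backend from the connection tuple only.

    The payload is never read, so every packet of the same connection
    hashes to the same backend.
    """
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return BACKENDS[digest % len(BACKENDS)]


def pick_application_level(request_bytes):
    """Layer 7: read the HTTP request line and route on the URL path."""
    request_line = request_bytes.split(b"\r\n", 1)[0].decode("ascii")
    _method, path, _version = request_line.split(" ", 2)
    for prefix, pool in PATH_ROUTES.items():
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

The layer 4 function never touches the request, so it is cheap and protocol-agnostic; the layer 7 function must parse the request but can send `/api/` and `/static/` traffic to different pools.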

What are the Advantages of Server Load Balancing?

Distributing incoming network traffic across multiple servers through a load balancer makes application delivery to end users more efficient and reliable. IT teams increasingly rely on server load balancers to:

• Increase Scalability: Load balancers direct spikes in traffic to the servers in the pool best suited to handle them and, in environments that support autoscaling, can add or remove server resources as demand changes, keeping application performance optimized.

• Redundancy: Using multiple web servers to deliver applications or websites safeguards against inevitable hardware failures and application downtime. When a server goes down, server load balancers can automatically shift its traffic to the servers that are still working, with little to no impact on the end user.

• Maintenance and Performance: Businesses with web servers distributed across multiple locations and cloud environments can schedule maintenance to improve performance at any time with minimal impact on application uptime, because server load balancers can redirect traffic to resources that are not undergoing maintenance.
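All three advantages come down to which servers stay in rotation. A minimal sketch, assuming a hypothetical `Backend` record whose `healthy` flag is set by health checks and whose `in_maintenance` flag is set by an operator, is a round-robin pool that simply skips ineligible servers:

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True          # flipped by periodic health checks
    in_maintenance: bool = False  # set by an operator before maintenance


class Pool:
    """Round-robin pool that skips failed and drained backends."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._counter = 0

    def add(self, backend):
        # Scaling out: new capacity joins the rotation immediately.
        self.backends.append(backend)

    def record_health_check(self, name, passed):
        # Unknown names are ignored in this simplified sketch.
        for backend in self.backends:
            if backend.name == name:
                backend.healthy = passed

    def pick(self):
        candidates = [b for b in self.backends
                      if b.healthy and not b.in_maintenance]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        choice = candidates[self._counter % len(candidates)]
        self._counter += 1
        return choice
```

A failed health check or a maintenance flag removes a server from selection without any change on the client side, which is what lets traffic move away with little impact on end users.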

What is the Difference Between HTTP Server Load Balancing and TCP Load Balancing?

HTTP server load balancing handles HTTP traffic using HTTP's simple request/response model. A TCP load balancer, by contrast, serves applications that do not speak HTTP, and is usually implemented at layer 4, while HTTP load balancing operates at layer 7. An HTTP load balancer is a reverse proxy that can perform extra actions on HTTP traffic, including HTTPS traffic once TLS is terminated, such as routing requests by host name or URL and inserting headers.
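One of those extra actions can be sketched in a few lines. This assumes the request has already been decrypted, uses a hypothetical host-to-pool map, and appends the client address to the conventional `X-Forwarded-For` header; it is a simplified illustration, not how any particular product rewrites requests.

```python
def route_and_rewrite(raw, client_ip, host_routes, default_pool):
    """Pick a pool from the Host header and append the client IP to X-Forwarded-For.

    Simplified: assumes a complete, well-formed HTTP/1.x request head with at
    most one X-Forwarded-For header, and ignores IPv6 literals in Host.
    """
    head, sep, body = raw.partition(b"\r\n\r\n")
    request_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    headers, host, prior_xff = [], "", None
    for line in header_lines:
        name, _, value = line.partition(":")
        value = value.strip()
        if name.lower() == "host":
            host = value.split(":")[0].lower()  # drop any port for routing
        if name.lower() == "x-forwarded-for":
            prior_xff = value  # rebuilt below with the client appended
            continue
        headers.append(f"{name}: {value}")
    xff = f"{prior_xff}, {client_ip}" if prior_xff else client_ip
    headers.append(f"X-Forwarded-For: {xff}")
    new_head = "\r\n".join([request_line, *headers])
    pool = host_routes.get(host, default_pool)
    return pool, new_head.encode("iso-8859-1") + sep + body
```

A TCP load balancer forwarding the same bytes could do neither step, because it does not interpret the HTTP request.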

Does Avi offer Server Load Balancing?

Yes. Avi Networks delivers modern, multi-cloud load balancing, including an entirely innovative way of handling local and global server load balancing for enterprise customers across the data center and clouds.

This capability delivers:

• Active / standby data center traffic distribution

• Active / active data center traffic distribution

• Geolocation database and location mapping

• Consistency across data centers

• Rich visibility and metrics for all transactions
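Avi's own implementation is not shown here. As a generic sketch of the first three capabilities, assuming a hypothetical table of two data centers and a hypothetical region-to-site map standing in for a geolocation database, a GSLB service could choose which virtual IP to hand a client like this:

```python
# Hypothetical data centers and virtual IPs (documentation address ranges).
SITES = {
    "us-east": {"vip": "198.51.100.10", "healthy": True},
    "eu-west": {"vip": "203.0.113.10", "healthy": True},
}
# Stand-in for a geolocation database: client region -> nearest site.
REGION_TO_SITE = {"NA": "us-east", "EU": "eu-west"}


def resolve_active_standby(sites, primary, standby):
    """Send everyone to the primary site; use the standby only if it fails."""
    for name in (primary, standby):
        if sites[name]["healthy"]:
            return sites[name]["vip"]
    return None  # both sites down


def resolve_active_active(sites, region_map, client_region):
    """Serve from the client's mapped site, else from any healthy site."""
    preferred = region_map.get(client_region)
    if preferred is not None and sites[preferred]["healthy"]:
        return sites[preferred]["vip"]
    for site in sites.values():
        if site["healthy"]:
            return site["vip"]
    return None  # no healthy site anywhere
```

In a DNS-based GSLB deployment, the returned address would typically become the answer to the client's DNS query, so failover between data centers happens at name resolution time.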

For more on the actual implementation of load balancers, check out our Application Delivery How-To Videos.

For more information on server load balancing, see the following resources: