Content Caching Definition

Content caching is a performance optimization mechanism in which data is delivered from the closest servers for optimal application performance. For example, content from a single server can be copied and distributed across various data centers and clouds. When that content needs to be accessed, the content can be retrieved from any of the cached locations based on geographic location, performance, and bandwidth availability.

Content Caching FAQs

What is Content Caching?

Page load time directly impacts user experience (UX) and conversions. Users can face major delays when loading content if an application or website can only retrieve content from a single origin server. Poor performance often occurs as the server waits for images, videos, text, and documents to be retrieved from distant back-end servers and to travel across many network hops.

Content caching is a mechanism for optimizing application performance and overcoming this difficulty. Clients can experience much faster response times for follow-up requests for documents that have been cached.

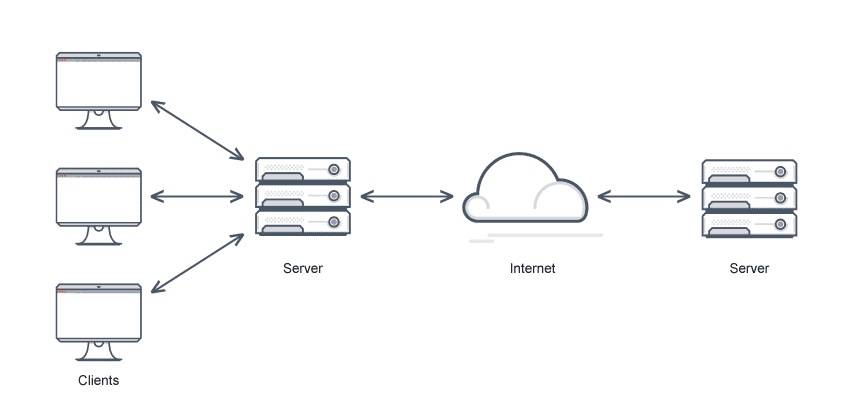

Data and content from a single server can be duplicated and distributed across multiple clouds and data centers so users can retrieve it from the closest servers. The location that serves a given request can be chosen based on geographic proximity, but also on bandwidth availability or performance.
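The selection step described above can be sketched in Python. This is a minimal illustration under stated assumptions, not how any particular CDN routes requests: the `CacheLocation` fields, the 100 Mbps bandwidth threshold, and the rule of preferring the lowest latency and then the shortest distance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CacheLocation:
    """A cached copy of the content (hypothetical fields for illustration)."""
    name: str
    distance_km: float          # geographic distance to the requesting user
    latency_ms: float           # measured round-trip time to the user
    free_bandwidth_mbps: float  # spare bandwidth currently available


def choose_location(locations, min_bandwidth_mbps=100.0):
    """Pick the lowest-latency location that has enough spare bandwidth,
    breaking ties by geographic distance. If no location meets the
    bandwidth threshold, consider all of them."""
    eligible = [loc for loc in locations
                if loc.free_bandwidth_mbps >= min_bandwidth_mbps]
    candidates = eligible or list(locations)
    return min(candidates, key=lambda loc: (loc.latency_ms, loc.distance_km))


locations = [
    CacheLocation("us-east", distance_km=300, latency_ms=12, free_bandwidth_mbps=50),
    CacheLocation("us-west", distance_km=3500, latency_ms=60, free_bandwidth_mbps=800),
    CacheLocation("eu-west", distance_km=5800, latency_ms=85, free_bandwidth_mbps=900),
]
best = choose_location(locations)
```

Here the nearest location, `us-east`, is skipped because it lacks spare bandwidth, so `best` is `us-west`; lowering the threshold to 10 Mbps would select `us-east` instead.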

To reduce the load on the origin server and shorten page load times, developers use web browser caches and distributed caching servers managed by commercial content delivery networks (CDNs). Caching on CDN servers is called content delivery network caching, or CDN caching.
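Browsers and CDNs decide how long they may reuse a cached response largely from the HTTP `Cache-Control` response header. The sketch below is a simplified freshness check that assumes only the `max-age`, `s-maxage`, `private`, `no-store`, and `no-cache` directives matter; real caches follow the full HTTP caching rules (RFC 9111), including `Expires`, heuristic freshness, and revalidation, which this example ignores. The function names are hypothetical.

```python
def freshness_lifetime(cache_control, shared=False):
    """Return how many seconds a response may be reused without contacting
    the origin, based only on its Cache-Control header. Returns None when no
    explicit lifetime is given. `shared` is True for a CDN (shared) cache and
    False for a browser (private) cache."""
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        directives[name.lower()] = value.strip('"')

    # Simplification: treat these as "never fresh", so every request
    # goes back to the origin.
    if "no-store" in directives or "no-cache" in directives:
        return 0
    if shared and "private" in directives:
        return 0  # shared caches must not reuse private responses
    if shared and "s-maxage" in directives:
        return int(directives["s-maxage"])  # overrides max-age for shared caches
    if "max-age" in directives:
        return int(directives["max-age"])
    return None


def is_fresh(cache_control, age_seconds, shared=False):
    """A cached response is fresh while its age is below its lifetime."""
    lifetime = freshness_lifetime(cache_control, shared)
    return lifetime is not None and age_seconds < lifetime
```

For example, with `max-age=60, s-maxage=3600`, a browser treats a 10-minute-old copy as stale, while a CDN may still serve it.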

In computing, a cache is a high-speed storage layer for transient data. Because it keeps copies in a faster, more optimized storage location, future requests for the cached data can be served more quickly than by going back to the original source. Caching enables users to efficiently reuse previously computed or retrieved data.

Cached data is typically stored in fast-access hardware such as random-access memory (RAM), often together with a software caching component. The main purpose of a cache is to improve data retrieval performance by reducing the need to access the slower underlying storage layer.
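A common way to put a fast in-memory layer in front of slower storage is the cache-aside pattern, sketched below. `SlowStore` is a hypothetical stand-in for a disk-based database, and a plain Python dictionary plays the role of the RAM cache. There is no size limit or expiration, so this is an illustration rather than a production cache.

```python
import time


class SlowStore:
    """Hypothetical slow storage layer, such as a disk-based database."""

    def __init__(self, data):
        self._data = dict(data)
        self.reads = 0  # how many times the slow layer was actually accessed

    def get(self, key):
        self.reads += 1
        time.sleep(0.01)  # simulate slow access
        return self._data.get(key)


class CacheAside:
    """Checks an in-memory dictionary first and reads the slow store only on a miss."""

    def __init__(self, store):
        self.store = store
        self._cache = {}

    def get(self, key):
        if key in self._cache:
            return self._cache[key]   # hit: no access to the slow layer
        value = self.store.get(key)   # miss: read from the slow layer
        self._cache[key] = value      # keep a copy for future requests
        return value


store = SlowStore({"user:42": {"name": "Ada"}})
cache = CacheAside(store)
first = cache.get("user:42")   # miss: read from the store
second = cache.get("user:42")  # hit: served from memory
```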

In contrast to databases, which generally retain complete and durable data, caches usually store a subset of data temporarily, trading capacity for speed.
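Because a cache holds only a subset of the data, it needs a rule for what to discard when it is full. Least-recently-used (LRU) eviction is one widely used policy, though not the only one. The minimal sketch below assumes a fixed entry count as the capacity and uses `collections.OrderedDict` to track recency.

```python
from collections import OrderedDict


class LRUCache:
    """Holds at most `capacity` entries and evicts the least recently used one."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._entries = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._entries:
            return default
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key, value):
        self._entries[key] = value
        self._entries.move_to_end(key)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # drop the least recently used entry

    def __contains__(self, key):
        return key in self._entries


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" becomes the most recently used entry
cache.put("c", 3)   # over capacity: evicts "b", the least recently used
```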

Content caching can be applied at network layers, including CDNs and DNS, and throughout other layers of technology, including databases, operating systems, and web applications. It can significantly improve IOPS and reduce latency for many read-heavy application workloads, such as gaming, Q&A portals, social networking, and other forms of shared content. Cached information may include API requests and responses, results of computationally intensive calculations, database query results, and web artifacts such as JavaScript, HTML, and image files. Content caching also benefits high-performance computing simulations, recommendation engines, and other compute-intensive, data-heavy workloads.
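Caching the results of computationally intensive calculations can be as simple as memoizing a function. The sketch below uses Python's standard `functools.lru_cache` decorator; `expensive_score` is a hypothetical stand-in for something like a recommendation score, and `call_count` exists only to show how often the real computation runs.

```python
from functools import lru_cache

call_count = 0  # counts how often the function body actually runs


@lru_cache(maxsize=1024)
def expensive_score(user_id: int, item_id: int) -> float:
    """Hypothetical compute-intensive calculation; results are cached per argument pair."""
    global call_count
    call_count += 1
    return sum((user_id * k + item_id) % 7 for k in range(100_000)) / 100_000


first = expensive_score(3, 42)   # computed and stored in the cache
second = expensive_score(3, 42)  # returned from the cache without recomputing
```

`expensive_score.cache_info()` reports hit and miss counts. The arguments must be hashable because they form the cache key.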

In a distributed computing environment, data can span multiple content caches and be stored in a central location for the benefit of all users.

Example of Content Caching

Amazon subscribers in any location might place a number of demands on their subscription. For example, they stream their favorite shows and expect fast access with minimal buffering, and they shop and expect Prime shipping times wherever they live. To meet these expectations, Amazon copies streaming videos from origin servers to caching servers all over the world, and it moves inventory stock closer to customers in the same way.

Using a distributed network this way for digital content is known as pushing content to the edge.

Why Use Content Caching?

There are many reasons to use content caching:

Faster site and application performance. Cached content of all kinds is served as quickly as static content, reducing latency. Reading data from an in-memory cache offers extremely fast data access and improves the overall performance of the website or application. In addition to lowering latency, in-memory systems increase read throughput, or request rate, compared to disk-based databases; this rate is commonly measured in input/output operations per second (IOPS).

Predictable performance. Spikes in usage are a common challenge: think of social media apps on election day or during the Super Bowl, or eCommerce websites on Cyber Monday or Black Friday. Increased database load causes higher latencies and makes overall application performance unpredictable. A high-throughput in-memory cache helps solve these problems.

Added capacity. Taking repetitive tasks away from your origin servers provides more capacity for applications to serve more users.

Eliminate database hotspots. In many applications, a small subset of data, such as a trending product or a celebrity profile, is accessed far more frequently than the rest, creating hotspots. Storing the most commonly accessed data in an in-memory cache provides fast and predictable performance while reducing the need to overprovision the database.

Availability. Serving cached content when origin servers fail protects users from catastrophic errors.
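Serving cached content during an origin outage is often called serving stale content; HTTP defines a `stale-if-error` Cache-Control extension for this purpose (RFC 5861). The sketch below is a minimal, hypothetical version of the idea: `fetch_from_origin` and `OriginUnavailable` are assumed names, the 60-second TTL is arbitrary, and an injectable clock makes the behavior easy to follow.

```python
import time


class OriginUnavailable(Exception):
    """Raised by the hypothetical origin fetch function when the origin is down."""


class StaleIfErrorCache:
    def __init__(self, fetch_from_origin, ttl_seconds, clock=time.monotonic):
        self.fetch = fetch_from_origin
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (value, time stored)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] < self.ttl:
            return entry[0]  # fresh copy: no need to contact the origin
        try:
            value = self.fetch(key)
        except OriginUnavailable:
            if entry is not None:
                return entry[0]  # origin failed: fall back to the stale copy
            raise  # nothing cached, so the failure reaches the client
        self._entries[key] = (value, now)
        return value


# Usage with a fake clock and an origin that can be switched off.
now = [0.0]
origin_up = [True]


def fetch_from_origin(key):
    if not origin_up[0]:
        raise OriginUnavailable(key)
    return f"content for {key}"


cache = StaleIfErrorCache(fetch_from_origin, ttl_seconds=60, clock=lambda: now[0])
cache.get("/home")    # fetched from the origin and cached
now[0] = 120.0        # the cached copy is now past its TTL
origin_up[0] = False  # and the origin goes down
during_outage = cache.get("/home")
```

The stale copy keeps `/home` available during the outage, while a page that was never cached still fails.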

What is Content Delivery Network (CDN) Caching?

A content delivery network caches videos, images, webpages, and other content in proxy servers. Proxy servers are located closer to end users than origin servers are; they receive requests from clients and pass them along to other destinations. CDN dynamic content caching can deliver content more rapidly because the servers are closer to the requesting users.

The CDN reduces response time and increases throughput by using the edge location nearest to the originating request location. A CDN cache hit happens when a user makes a successful request to a cache for content the cache has saved. A cache hit allows for faster loading of content since the CDN can deliver it immediately.

A CDN cache miss happens when the requested content is not saved in the cache. A cache miss causes the CDN server to pass the request along to the origin server. When the origin responds, the CDN server can store the response so that later requests for the same content result in cache hits.
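The hit-and-miss flow above can be sketched with two edge locations sharing one origin. Everything here is hypothetical and simplified: real CDNs also honor expiration headers and eviction policies, and the `"HIT"`/`"MISS"` labels only loosely mirror the cache status that many CDNs expose in a response header.

```python
class Origin:
    """Stand-in for the origin server; counts how many requests reach it."""

    def __init__(self, content):
        self.content = content
        self.requests = 0

    def fetch(self, path):
        self.requests += 1
        return self.content[path]


class EdgeServer:
    """Hypothetical CDN edge location with its own cache."""

    def __init__(self, name, origin):
        self.name = name
        self.origin = origin
        self.cache = {}

    def handle(self, path):
        """Return (body, status) where status is "HIT" or "MISS"."""
        if path in self.cache:
            return self.cache[path], "HIT"  # deliver immediately from the edge
        body = self.origin.fetch(path)      # miss: pass the request to the origin
        self.cache[path] = body             # store it so later requests hit
        return body, "MISS"


origin = Origin({"/logo.png": b"logo-bytes"})
frankfurt = EdgeServer("frankfurt", origin)
tokyo = EdgeServer("tokyo", origin)
statuses = [
    frankfurt.handle("/logo.png")[1],  # first request in Frankfurt
    frankfurt.handle("/logo.png")[1],  # repeat request in Frankfurt
    tokyo.handle("/logo.png")[1],      # first request in Tokyo
]
```

Each edge keeps its own cache, so the first request at every location is a miss; only those misses reach the origin.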

There are other kinds of caching as well. For example, DNS servers cache the answers to recent DNS queries so they can reply with a domain's IP address instantly. Search engines may also cache websites that frequently appear in search engine results pages (SERPs) so they can respond to user queries even when the websites themselves cannot.
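DNS answers carry a time to live (TTL) that tells a resolver how long it may reuse them. The sketch below caches answers until their TTL expires. `resolve_upstream` is a hypothetical callable that returns an address and a TTL, and the example uses the documentation address 192.0.2.10 instead of performing a real lookup.

```python
import time


class DnsCache:
    """Minimal TTL-based cache of domain name -> IP address answers."""

    def __init__(self, resolve_upstream, clock=time.monotonic):
        self.resolve_upstream = resolve_upstream  # callable: name -> (ip, ttl_seconds)
        self.clock = clock
        self._answers = {}  # name -> (ip, expires_at)

    def lookup(self, name):
        name = name.lower().rstrip(".")  # DNS names are case-insensitive
        now = self.clock()
        cached = self._answers.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # answer is still within its TTL
        ip, ttl = self.resolve_upstream(name)
        self._answers[name] = (ip, now + ttl)
        return ip


# Usage with a fake clock and a fake upstream resolver.
now = [0.0]
queries = []


def resolve_upstream(name):
    queries.append(name)
    return "192.0.2.10", 300


dns = DnsCache(resolve_upstream, clock=lambda: now[0])
dns.lookup("www.example.com")   # asks upstream
dns.lookup("WWW.Example.com.")  # served from the cache
now[0] = 301.0
dns.lookup("www.example.com")   # TTL expired: asks upstream again
```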

Does Avi Offer Content Caching Solutions?

Yes. Avi Vantage can cache content, reducing the workload on both Avi Vantage and the back-end servers and speeding up page load times for clients. When a server sends a response, Avi Vantage can add the object to its cache and serve it to subsequent clients that make the same request. In this way, caching can reduce the number of requests and connections sent to the server.

Enabling both content caching and compression allows Avi Vantage to compress text-based objects and store both the original uncompressed and the compressed versions in the cache. Avi Vantage then serves subsequent requests from clients that support compression directly from the cache, greatly reducing the compression workload because objects do not need to be compressed again for every request.
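The general idea of compressing once and reusing the result can be illustrated with Python's standard `gzip` module. This is not Avi Vantage's implementation or configuration: `CompressingCache` is a hypothetical class, and it simplifies `Accept-Encoding` handling by ignoring quality values such as `gzip;q=0`.

```python
import gzip


class CompressingCache:
    """Stores both the original and the gzip-compressed version of each text object."""

    def __init__(self):
        self._objects = {}     # path -> (original_bytes, gzipped_bytes)
        self.compressions = 0  # how many times compression actually ran

    def store(self, path, body: bytes):
        compressed = gzip.compress(body)  # compress once, when the object is cached
        self.compressions += 1
        self._objects[path] = (body, compressed)

    def serve(self, path, accept_encoding: str):
        """Return (body, content_encoding), where content_encoding is None for the original."""
        original, compressed = self._objects[path]
        # Simplification: ignores q-values in Accept-Encoding.
        encodings = [e.split(";")[0].strip().lower() for e in accept_encoding.split(",")]
        if "gzip" in encodings:
            return compressed, "gzip"  # reuse the stored compressed copy
        return original, None


cache = CompressingCache()
page = b"<html>" + b"hello " * 500 + b"</html>"
cache.store("/index.html", page)
gz_body, enc = cache.serve("/index.html", "gzip, deflate, br")
plain_body, plain_enc = cache.serve("/index.html", "identity")
```

However many clients request the page, compression runs only once, when the object is first stored.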

For more on the actual implementation of load balancers, check out our Application Delivery How-To Videos.