Microservices Definition

Microservices is an architectural design for building a distributed application, typically using containers. The name comes from the way each function of the application operates as an independent service. This architecture allows each service to scale or be updated without disrupting the other services in the application. A microservices framework creates a highly scalable, distributed system that avoids the bottlenecks of a central database and improves business capabilities, such as enabling continuous delivery/deployment and modernizing the technology stack.

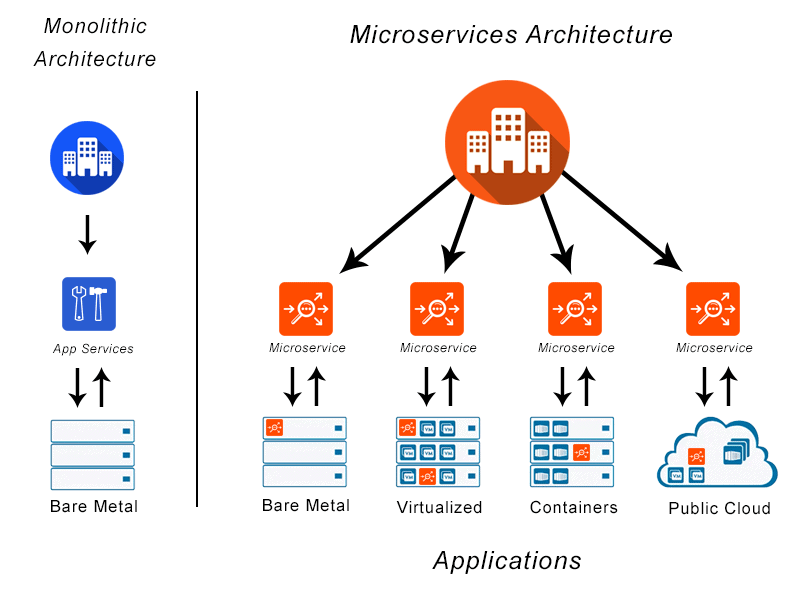

What Is Microservices Architecture?

Microservices architecture treats each function of an application as an independent service that can be altered, updated or taken down without affecting the rest of the application.

Applications were traditionally built as monolithic pieces of software. Adding new features required reconfiguring and updating everything within the application, from processes and communications to security. Traditional monolithic applications have long lifecycles and are updated infrequently, and changes usually affect the entire application. This costly and cumbersome process delays advancements and updates in enterprise application development.

Microservices architecture was designed to solve this problem. Each service is created individually and deployed separately from the others. This architectural style allows services to be scaled according to specific business needs, and services can be changed rapidly without affecting other parts of the application. Continuous delivery is one of the many advantages of microservices.
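To make the idea of an independent service concrete, here is a minimal Python sketch of a single-function service built only on the standard library. The inventory domain, the `/stock/<sku>` and `/health` paths, and the `start` and `get_json` helpers are hypothetical illustrations, not part of any particular platform; in a real deployment this process would run in its own container and own its own data store.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical data owned exclusively by this service; other services
# never read it directly, they call the HTTP API instead.
INVENTORY = {"sku-1": 12, "sku-2": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Serves one business function (stock lookups) plus a health check."""

    def do_GET(self):
        if self.path == "/health":
            self._send(200, {"status": "ok"})
        elif self.path.startswith("/stock/"):
            sku = self.path[len("/stock/"):]
            if sku in INVENTORY:
                self._send(200, {"sku": sku, "quantity": INVENTORY[sku]})
            else:
                self._send(404, {"error": "unknown sku"})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, body):
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the example


def start(port=0):
    """Run the service on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_json(url):
    """How another service would call this one: plain HTTP and JSON."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())
```

For example, after `srv = start()`, calling `get_json(f"http://127.0.0.1:{srv.server_address[1]}/stock/sku-1")` returns `(200, {"sku": "sku-1", "quantity": 12})`. Because callers depend only on the HTTP interface, the service can be redeployed or scaled without touching them, as long as that interface stays stable.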

Microservices architecture has the following attributes:

• Application is broken into modular, loosely coupled components

• Application can be distributed across clouds and data centers

• Adding a new feature requires updating only the microservices involved

• Network services must be software-defined and run as a fabric for each microservice to connect to

When to Use Microservices?

Companies of any size can benefit from a microservices architecture if they have applications that need frequent updates, experience dynamic traffic patterns, or require near real-time communication.

Who Uses Microservices?

Social media companies like Facebook and Twitter, retailers like Amazon, media providers like Netflix, ride-sharing services like Uber and Lyft, and many of the world’s largest financial services companies all use microservices. Enterprises have increasingly moved from monolithic architectures to microservices applications, setting new standards for container technology and demonstrating the benefits of this architectural design.

How Are Microservices Deployed?

Deployment of microservices requires the following:

• Ability to scale across many applications at once, even when each service receives a different amount of traffic

• Ability to quickly build microservices that can be deployed independently of one another

• Isolation, so that a failure in one microservice does not affect any of the other services
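One widely used way to keep a failing dependency from dragging down its callers is the circuit breaker pattern. The sketch below is a generic, simplified, single-threaded version of that pattern, not something the requirements above prescribe: after `failure_threshold` consecutive errors it stops calling the dependency for `reset_timeout` seconds and fails fast, so the caller can fall back. The class, its parameters, and the `recommendations_with_fallback` helper are hypothetical names.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling a dependency that is considered unhealthy."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock       # injectable so tests can control time
        self.failures = 0        # consecutive failures observed
        self.opened_at = None    # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("dependency unhealthy; failing fast")
            # Timeout elapsed ("half-open"): let a trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open the circuit, or re-open it after a failed trial call.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def recommendations_with_fallback(breaker, fetch):
    """Degrade gracefully: return an empty list instead of propagating the failure."""
    try:
        return breaker.call(fetch)
    except Exception:
        return []
```

While the circuit is open, the unhealthy dependency receives no traffic at all, which gives it room to recover and keeps the calling service responsive.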

Docker is a common way to deploy microservices, using the following steps:

• Package each microservice as a container image

• Deploy each service instance as a container

• Scale by changing the number of container instances

Using an orchestration system such as Kubernetes together with Docker containers allows a cluster of containers to be managed as a single system. It also lets enterprises run containers across multiple hosts while providing service discovery and replication control. Large-scale deployments often rely on Kubernetes.
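Replication control rests on a desired-state idea: you declare how many instances a service should have, and a control loop keeps starting or stopping containers until reality matches. Kubernetes controllers work on this principle; the Python sketch below only imitates it with in-memory objects, so `Deployment`, `Cluster`, and `reconcile` are hypothetical names rather than Kubernetes APIs, and no real containers are started.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Deployment:
    """Desired state for one service (hypothetical, loosely Kubernetes-inspired)."""
    name: str
    replicas: int


@dataclass
class Cluster:
    """Actual state: running container IDs per service name (simulated)."""
    running: dict = field(default_factory=dict)
    ids: count = field(default_factory=count)

    def start_container(self, service):
        container_id = f"{service}-{next(self.ids)}"
        self.running.setdefault(service, []).append(container_id)
        return container_id

    def stop_container(self, service):
        # Stop the most recently started instance.
        return self.running[service].pop()


def reconcile(deployment, cluster):
    """One control-loop pass: converge actual instances toward the desired count."""
    current = len(cluster.running.get(deployment.name, []))
    for _ in range(deployment.replicas - current):
        cluster.start_container(deployment.name)
    for _ in range(current - deployment.replicas):
        cluster.stop_container(deployment.name)
    return len(cluster.running.get(deployment.name, []))
```

Scaling then reduces to editing `replicas` and letting the next reconcile pass do the work, and a crashed container is replaced the same way, because the loop only compares desired and actual counts.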

How Do Microservices Scale?

Microservice architectures allow organizations to divide applications into separate domains managed by individual groups, which is key to building highly scaled applications. This is also one of the main reasons businesses turn to the public cloud to deliver microservices applications: on-premises infrastructure has tended to be optimized for legacy monolithic applications, although that is not necessarily the case. A new generation of technology vendors has set out to provide solutions for both environments. Because responsibilities are separated, each group can work independently on its own services without affecting developers in other groups working on the same application.

Does VMware NSX Advanced Load Balancer Provide Microservices?

VMware NSX Advanced Load Balancer provides centrally orchestrated container ingress with dynamic load balancing, service discovery, security, micro-segmentation for both north/south and east/west traffic, and analytics for container-based applications running in OpenShift and Kubernetes environments.
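Service discovery and load balancing go together: callers ask for a service by name, and the platform picks one of its current instances. The sketch below assumes a simple in-memory registry and plain round-robin selection; it illustrates the concept only and is not how VMware NSX Advanced Load Balancer is implemented or configured. The class name and endpoint addresses are hypothetical.

```python
from collections import defaultdict


class ServiceRegistry:
    """Hypothetical registry mapping a service name to its live endpoints."""

    def __init__(self):
        self._endpoints = defaultdict(list)
        self._counters = defaultdict(int)  # per-service round-robin position

    def register(self, service, endpoint):
        # Called when a new instance (e.g. a container) comes up.
        if endpoint not in self._endpoints[service]:
            self._endpoints[service].append(endpoint)

    def deregister(self, service, endpoint):
        # Called when an instance is scaled away or fails its health check.
        if endpoint in self._endpoints[service]:
            self._endpoints[service].remove(endpoint)

    def pick(self, service):
        """Round-robin choice among the currently registered endpoints."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no healthy endpoints for {service!r}")
        index = self._counters[service] % len(endpoints)
        self._counters[service] += 1
        return endpoints[index]
```

Because `pick` always reads the current endpoint list, instances added or removed by scaling are picked up on the next request without any caller changes. Production load balancers typically offer other algorithms too, such as least connections; round-robin is used here only because it is the simplest to show.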

VMware NSX Advanced Load Balancer provides a container application networking platform with two major components:

• Controller: A cluster of up to three nodes that provide the control, management and analytics plane for microservices. The Controller communicates with a container management platform such as OpenShift or Kubernetes, deploys and manages Service Engines, configures services on all Service Engines, and aggregates telemetry data from Service Engines to form an application map.

• Service Engines: Service proxies deployed on every Kubernetes node that provide the application services in the data plane and report real-time telemetry data to the Controller.
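The application map the Controller builds can be pictured as aggregation over per-request telemetry. In this hypothetical sketch, each Service Engine reports a list of `Record`s (source service, destination service, latency, HTTP status), and the Controller side merges them into one edge per caller/callee pair with request count, error rate, and mean latency. The record schema, the engine names, and the choice of metrics are assumptions for illustration, not the product's actual telemetry format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One request observed by a Service Engine (hypothetical schema)."""
    source: str
    destination: str
    latency_ms: float
    status: int


def build_app_map(reports):
    """Merge per-engine reports into edges: (source, destination) -> summary stats."""
    totals = defaultdict(lambda: {"requests": 0, "errors": 0, "total_ms": 0.0})
    for engine_records in reports.values():
        for record in engine_records:
            edge = totals[(record.source, record.destination)]
            edge["requests"] += 1
            edge["errors"] += int(record.status >= 500)  # count server errors only
            edge["total_ms"] += record.latency_ms
    return {
        pair: {
            "requests": t["requests"],
            "error_rate": t["errors"] / t["requests"],
            "avg_latency_ms": t["total_ms"] / t["requests"],
        }
        for pair, t in totals.items()
    }
```

Keying edges by service name rather than by engine is what turns scattered per-node observations into a single service-to-service view.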

For more on the actual implementation of load balancing, security applications and web application firewalls, check out our Application Delivery How-To Videos.
